Research

Eye Gaze Tracking

Eye gaze is an important functional component in various applications, as it indicates where a person's attention lies and can thus be used to study their intentions and understand social interactions. For these reasons, accurately estimating gaze is an active research topic in computer vision, with applications in affect analysis, saliency detection and action recognition, to name a few. Gaze estimation has also been applied in domains beyond computer vision, such as navigation for gaze-controlled wheelchairs, detection of drivers' non-verbal behaviors, and inferring the object of interest in human-robot interactions.

Research Output: [RT-GENE(ECCV2018)], [CVPRW’22]

Researchers: Hengfei and Nora

Hand and Object Interaction Modeling

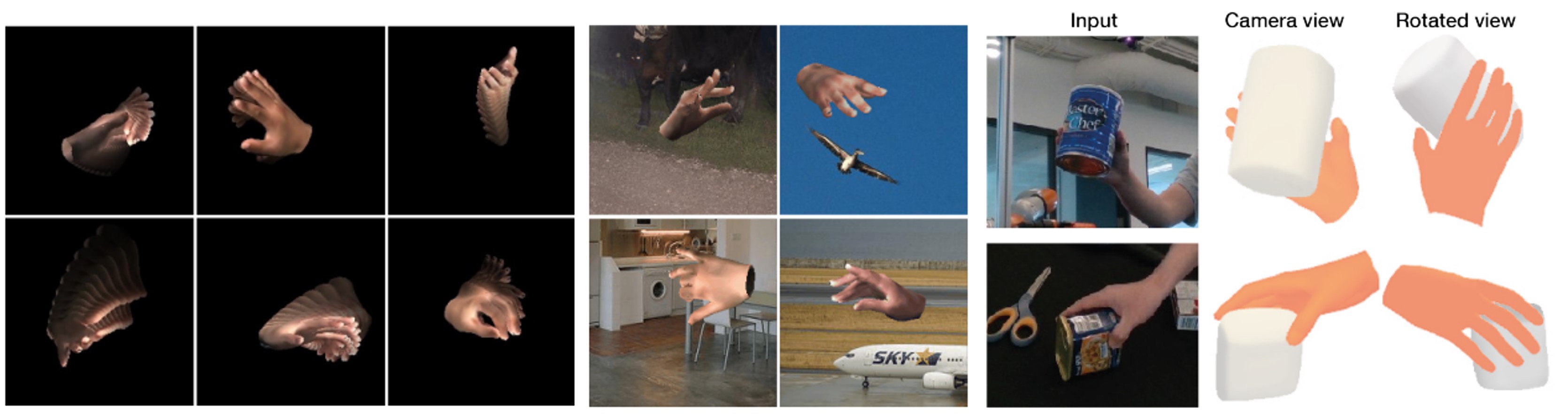

Estimating the pose and shape of hands and objects under interaction has numerous applications, including augmented and virtual reality. Existing approaches for hand and object reconstruction require explicitly defined physical constraints and known objects, which limits their application domains. Our algorithm is agnostic to object models and learns the physical rules governing hand-object interaction. This requires automatically inferring the shapes and physical interaction of hands and (potentially unknown) objects. We approach this challenging problem with a collaborative learning strategy in which two branches of deep networks learn from each other. Specifically, we transfer hand mesh information to the object branch and object mesh information to the hand branch. The resulting optimisation (training) problem can be unstable, and we address this via two strategies: (i) attention-guided graph convolution, which helps identify and focus on mutual occlusion, and (ii) an unsupervised associative loss, which facilitates the transfer of information between the branches. Experiments on four widely used benchmarks show that our framework exceeds state-of-the-art accuracy in 3D pose estimation and also recovers dense 3D hand and object shapes. An ablation study shows that each technical component above contributes meaningfully.
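To make the idea of two branches "learning from each other" more concrete, here is a minimal sketch of attention-weighted feature transfer between a hand node set and an object node set. It is not the published architecture: it assumes plain scaled dot-product attention and a residual update, and the function names (`cross_branch_transfer`, `collaborative_step`) are hypothetical. Each receiving node attends over all nodes of the other branch, so nodes that are most similar (for example, regions in mutual contact or occlusion) contribute most to the message.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_branch_transfer(receiver: List[Vector], sender: List[Vector]) -> List[Vector]:
    """Add to each receiver node an attention-weighted sum of sender node features.

    Hypothetical simplification: weights are a softmax over scaled dot products,
    and the aggregated message is added residually to the receiver's feature.
    """
    dim = len(receiver[0])
    scale = 1.0 / math.sqrt(dim)
    updated = []
    for r in receiver:
        weights = softmax([dot(r, s) * scale for s in sender])
        message = [sum(w * s[k] for w, s in zip(weights, sender)) for k in range(dim)]
        updated.append([r[k] + message[k] for k in range(dim)])
    return updated


def collaborative_step(hand: List[Vector], obj: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """One round of mutual transfer: object -> hand and hand -> object."""
    new_hand = cross_branch_transfer(hand, obj)
    new_obj = cross_branch_transfer(obj, hand)
    return new_hand, new_obj


if __name__ == "__main__":
    hand_nodes = [[1.0, 0.0], [0.0, 1.0]]
    object_nodes = [[1.0, 1.0]]
    print(collaborative_step(hand_nodes, object_nodes))
```

With a single object node, every hand node receives that node's full feature; the object node, seeing two equally similar hand nodes, receives their average. In a trained network the features and attention would of course be learned rather than fixed.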

Research Output: [CVPR’22], [SeqHAND(ECCV’22)], [CVPR’14], [TPAMI’16]

Researchers: Elden and Zhongqun

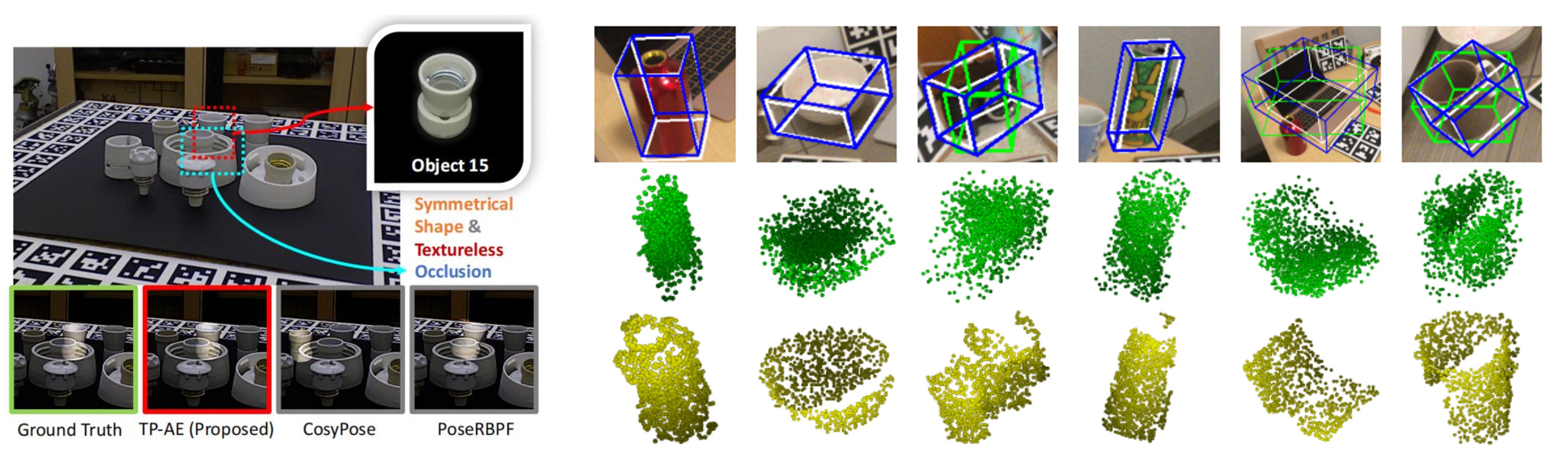

6D Object Pose Estimation

Fast and accurate tracking of an object’s motion is one of the key functionalities of a robotic system for achieving reliable interaction with the environment. This work focuses on the instance-level six-dimensional (6D) pose tracking problem for a symmetric and textureless object under occlusion. We propose a Temporally Primed 6D pose tracking framework with Auto-Encoders (TP-AE) to tackle this problem. The framework consists of a prediction step and a temporally primed pose estimation step. The prediction step quickly and efficiently generates an initial guess of the object’s current pose from historical information about the target object’s motion. Once this prior prediction is obtained, the temporally primed pose estimation step embeds the prior pose into the RGB-D input and leverages auto-encoders to reconstruct the target object with higher quality under occlusion, thus improving the framework’s performance. Extensive experiments show that the proposed method can accurately estimate the 6D pose of a symmetric and textureless object under occlusion, and significantly outperforms the state of the art on the T-LESS dataset while running in real time at 26 FPS.
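The prediction step can be illustrated with a simple motion prior. The text does not specify which predictor TP-AE uses, so the sketch below assumes a decoupled constant-velocity model: the relative rotation between the last two frames is applied once more, and the translation advances by the same displacement. A pose is represented here as a 3×3 rotation matrix plus a translation vector, and `predict_next_pose` is a hypothetical name.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]
Pose = Tuple[Matrix, Vector]  # (3x3 rotation matrix, 3D translation vector)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Matrix) -> Matrix:
    """Transpose of a 3x3 matrix (the inverse, for a rotation matrix)."""
    return [[a[j][i] for j in range(3)] for i in range(3)]


def predict_next_pose(prev: Pose, curr: Pose) -> Pose:
    """Constant-velocity guess for the next frame's pose.

    Rotation and translation are extrapolated independently (an assumption,
    not a full SE(3) composition): the relative rotation R_curr @ R_prev^T is
    applied again, and the translation repeats its last displacement.
    """
    r_prev, t_prev = prev
    r_curr, t_curr = curr
    delta_r = matmul(r_curr, transpose(r_prev))  # rotation from prev frame to curr frame
    r_next = matmul(delta_r, r_curr)
    t_next = [c + (c - p) for c, p in zip(t_curr, t_prev)]
    return r_next, t_next


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    r, t = predict_next_pose((identity, [0.0, 0.0, 0.5]), (rot_z_90, [0.1, 0.0, 0.5]))
    print(r, t)  # rotation of 180 degrees about z, translation moved a further 0.1 along x
```

A prior like this is cheap to compute, which suits a real-time tracker; the estimation step is then responsible for correcting it using the observed RGB-D data.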

Research Output: [TP-AE(ICRA’22)], [FS-Net(CVPR’21 Oral)], [G2L-Net(CVPR’20)], [PPNet(WACV’20)]

Researchers: Linfang and Wei

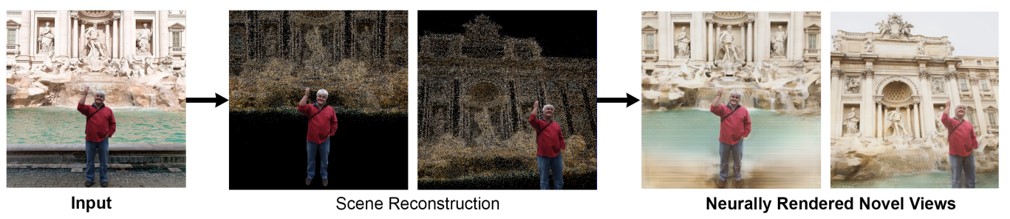

Neural Radiance Field (NeRF)

Imagine you have a photo of yourself where everything is perfect except the camera angle. Perhaps the photographer cut off the top of the attraction you were standing in front of, or you wish the photo had a wider field of view. The best way to correct this would be to retake the photo immediately after checking the result, but this is not always possible, especially at tourist locations, which are often where the photos you care most about are taken. Moreover, in situations where there is no “second try”, for example when your baby walks for the first time, a retake is not an option.

Research Output: [WACV’22]

Researchers: Jonathan and Hengfei

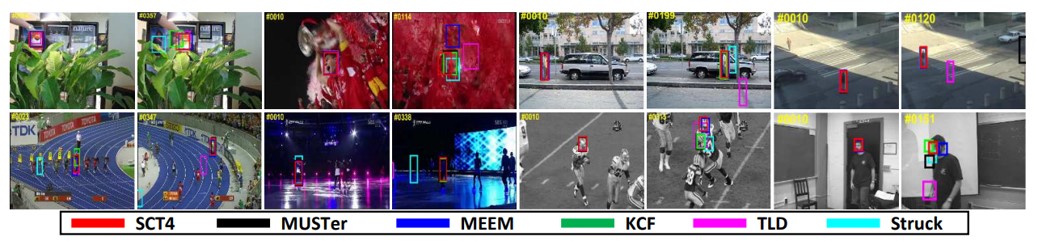

Visual Object Tracking

Key to realizing the vision of human-centred computing is the ability of machines to recognize people, so that spaces and devices can become truly personalized. However, the unpredictability of real-world environments undermines robust recognition, limiting usability. In real conditions, human identification systems have to handle issues such as out-of-set subjects and domain deviations, for which conventional supervised approaches to training and inference are poorly suited. With the rapid development of the Internet of Things (IoT), we advocate a new labelling method that exploits signals of opportunity hidden in heterogeneous IoT data. The key insight is that one sensor modality can leverage the signals measured by other co-located sensor modalities to improve its own labelling performance. If identity associations between heterogeneous sensor data can be discovered, data can be labelled automatically, leading to more robust human recognition without manual labelling or enrolment. Conversely, we also study the privacy implications of such cross-modal identity association.
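The idea of labelling one modality through another can be sketched as an assignment problem. In this minimal illustration, unlabelled camera tracks are matched to identity-bearing IoT streams (for example, a phone's activity signal) by choosing the one-to-one assignment with the highest total similarity, and each track then inherits that stream's identity. The similarity measure, the signal format and the function names are all hypothetical assumptions; the brute-force search is only practical for a handful of people, and a Hungarian solver would scale better.

```python
from itertools import permutations
from typing import Dict, List


def similarity(a: List[float], b: List[float]) -> float:
    """Hypothetical affinity: negative mean absolute difference of two time-aligned signals."""
    return -sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def associate(unlabelled: Dict[str, List[float]], labelled: Dict[str, List[float]]) -> Dict[str, str]:
    """Give each unlabelled track the identity of the best-matching labelled stream.

    Exhaustively scores every one-to-one assignment and keeps the one with the
    highest total similarity. Assumes len(unlabelled) <= len(labelled) and that
    all signals are sampled on the same time grid.
    """
    tracks = list(unlabelled)
    ids = list(labelled)
    best_score, best = float("-inf"), {}
    for perm in permutations(ids, len(tracks)):
        score = sum(similarity(unlabelled[t], labelled[i]) for t, i in zip(tracks, perm))
        if score > best_score:
            best_score, best = score, dict(zip(tracks, perm))
    return best


if __name__ == "__main__":
    camera_tracks = {"track_a": [0.0, 1.0, 1.0, 0.0], "track_b": [1.0, 0.0, 0.0, 1.0]}
    phone_streams = {"alice": [1.0, 0.0, 0.0, 1.0], "bob": [0.0, 1.0, 1.0, 0.0]}
    print(associate(camera_tracks, phone_streams))  # {'track_a': 'bob', 'track_b': 'alice'}
```

The same matching also hints at the privacy concern raised above: if anonymous camera tracks can be linked to device identities this easily, cross-modal association can de-anonymize people as well as label data.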

Research Output: [CVPR’18], [CVPR’17], [CVPR’16], [CVPR’13]

Researchers: Jinyu and Zhongqun